Understanding the Docker Container Ecosystem

Sachin Verma

Resource sharing has been a key focus of researchers ever since the first computing devices were created, and today it is ubiquitous across every segment of computing. Linux containers are one of the resource-sharing technologies that have sparked a revolution of sorts in the computing world. Modern servers are beasts that can run multiple operating systems simultaneously, thanks to advances in hardware-assisted virtualization. You can spin up hundreds of headless virtual machine instances on a single high-end physical server. But even such concurrent use is not enough to achieve high utilization of these servers.

Modern applications built on a service-oriented architecture sometimes require hundreds of services running on their behalf. Running many services with appropriate scalability and redundancy requires that we can start and stop them quickly. Running a full operating system instance in a virtual machine for each service does not help, because it is too heavy for the task at hand.

Also, as more and more computing infrastructure moves to the cloud, we are more concerned than ever about utilization and, ultimately, the cost we pay for that infrastructure. Docker addresses these concerns by letting you run instances of your application in a portable and scalable manner while keeping efficiency high.

What is Docker?

Docker is a container engine that powers modern microservices-based application design. At its core, Docker builds on resource isolation primitives in the Linux kernel: it uses cgroups (control groups) and namespaces to create containers that have their own filesystem, network, and processes. There is no init system in a container by default; the command you start runs as the container's first process, and it is up to the application writer to manage multiple processes. You could, for instance, use supervisord to manage several services or processes in one container, but that is not how Docker containers are meant to be built. Ideally there should be one service per container, which makes the application easier to scale and simpler to design. A container stops running as soon as the process started when it booted finishes or dies.
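To see cgroups from the inside, a process can read `/proc/self/cgroup`, where each line has the form `hierarchy-ID:controller-list:cgroup-path`. The Python sketch below parses that format. `CgroupEntry`, `parse_proc_cgroup`, and `read_own_cgroups` are hypothetical helper names of my own, and reading the file only works on Linux.

```python
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]  # empty for the unified cgroup v2 hierarchy
    path: str               # the cgroup the process belongs to in this hierarchy


def parse_proc_cgroup(text: str) -> List[CgroupEntry]:
    """Parse the contents of /proc/<pid>/cgroup (hierarchy-ID:controller-list:path)."""
    entries: List[CgroupEntry] = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split on the first two colons only, since a path may itself contain ':'.
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append(
            CgroupEntry(
                hierarchy_id=int(hierarchy_id),
                controllers=[c for c in controllers.split(",") if c],
                path=path,
            )
        )
    return entries


def read_own_cgroups(proc_file: str = "/proc/self/cgroup") -> List[CgroupEntry]:
    """Return the cgroups of the calling process (Linux only)."""
    with open(proc_file, encoding="utf-8") as f:
        return parse_proc_cgroup(f.read())
```

Inside a container on a cgroup v1 host using Docker's cgroupfs driver, the paths often look like `/docker/<container-id>`; on cgroup v2 hosts, where Docker typically gives each container its own cgroup namespace, you may only see `0::/`. Treat such paths as a hint rather than a reliable way to detect that you are in a container.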

Why Use Docker and Not a Virtual Machine?

There are a number of situations where using Docker makes more sense than using virtual machines (VMs). One of the primary motivations for using Docker is that we want a mechanism to package, deploy, and run applications in the same environment every time, everywhere. Any developer can attest to how frustrating it is to untangle the dependency mess when migrating an application from one platform to another. Different applications running on the same platform sometimes have conflicting dependencies, and upgrading a dependency for one application can break the other. A significant amount of time is wasted on this arduous exercise. Containers address the problem by keeping things simple: a container typically runs a single service, which keeps the dependency mess in check.

Containers start lightning fast, usually within a few seconds. This is a huge advantage over VMs, which take a long time to boot and start all the operating system services. A container avoids this overhead because it piggybacks on the host kernel, which is already running and doing that work for it. A container still gets the required isolation, as if it were running alone on the hardware with root privileges on its own filesystem.

Applications can run with root privileges inside a container, and thanks to namespace isolation, much of what they do stays confined to that container instead of affecting the host. Note that unless user namespaces are enabled, root inside a container is still root on the host kernel, so this isolation is weaker than that of a VM. Still, a misbehaving container is cheap to deal with: where recovering a VM may mean rebooting a full guest operating system, you can restart a container in seconds.

Key Components of Docker

Unfortunately, as with many other technologies, the lingo used to describe and explain the Docker ecosystem is broad and at times confusing. I have listed the important components of Docker below, each with a short description.

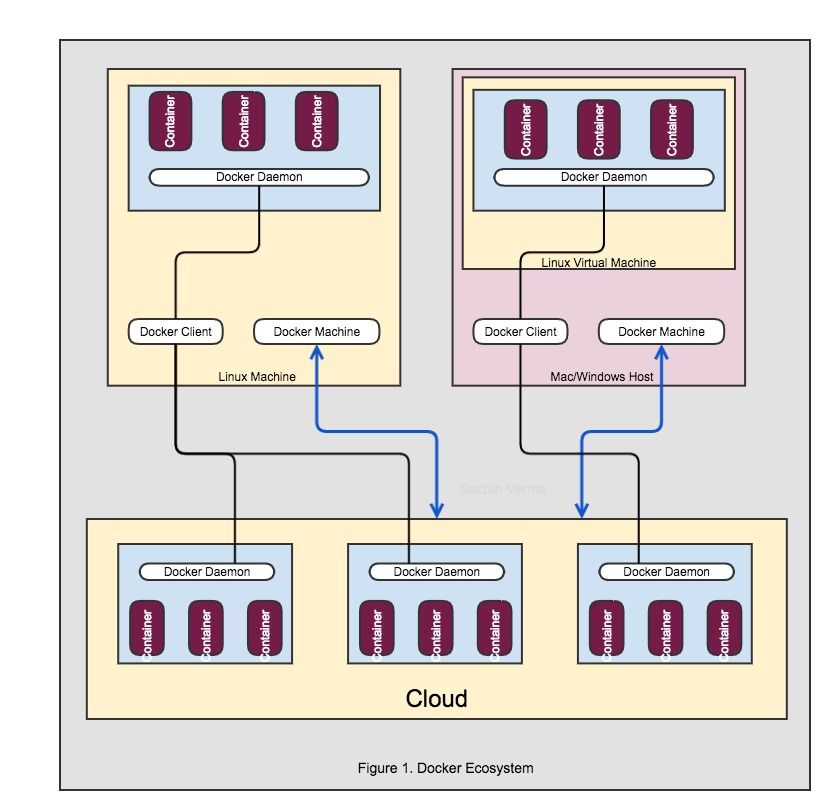

Docker Host

The machine that runs containers is called a Docker host. The operating system on a Docker host is usually some flavor of Linux tuned to run containers (CoreOS is one of the most popular ones around). On other hosts, such as Macs and Windows machines, a hypervisor like VirtualBox or Hyper-V spins up a virtual Linux host, which acts as the Docker host on that machine. A Docker host has all the required kernel support and the Docker runtime libraries, such as libcontainer and runc, which enable the Docker engine to create isolated containers for the application's services.

Docker Engine

Docker Engine is the runtime required to create, run, and manage containers on a Docker host. It is a daemon that can be controlled through a REST-like API.

libcontainer, the original runtime from Docker (the company), has been repackaged as runc, the reference implementation of the Open Container Initiative (OCI) runtime specification.

Docker client

The Docker client is a client-side utility that communicates with a Docker host and gives it commands to build, deploy, run, or stop containers. Docker clients are also available natively for Mac and Windows through tools like 'Docker for Mac' and 'Docker for Windows'.
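Because Docker Engine is driven by a REST-like API, a client is essentially an HTTP client. On Linux the engine listens by default on the Unix socket `/var/run/docker.sock`. The sketch below uses only Python's standard library to send a GET request over that socket. `UnixHTTPConnection` and `engine_get` are hypothetical names, and the sketch assumes the caller is allowed to open the socket (typically root or a member of the `docker` group).

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" is only used for the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET <path> to the Docker Engine API and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"GET {path} failed: {response.status} {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, `engine_get("/version")` should return a dictionary describing the engine (with keys such as `Version` and `ApiVersion`), and `engine_get("/containers/json")` lists running containers. The official client does the same kind of work, with versioned API paths and many more endpoints.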

Dockerfile

A Dockerfile is a blueprint of instructions that Docker uses to build container images. You specify a base image, which Docker fetches from a Docker registry and builds upon; the remaining instructions describe how to build it further. Each RUN instruction, which can be used, for example, to add a package to the image, creates another layer in the image.
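Image layers are content-addressed. The OCI image specification defines a `ChainID` for a stack of layers: the first ChainID is the base layer's `DiffID` (the digest of its uncompressed contents), and each further ChainID is the SHA-256 digest of the parent's ChainID, a space, and the new layer's DiffID. Much like a git commit, a layer's identity therefore depends on everything beneath it. The Python sketch below computes this; the byte strings standing in for layer tarballs are made up for illustration.

```python
import hashlib
from typing import List


def digest(data: bytes) -> str:
    """Return an OCI-style digest string, e.g. 'sha256:<64 hex chars>'."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def chain_ids(diff_ids: List[str]) -> List[str]:
    """Return the ChainID of every prefix of a layer stack (base layer first).

    ChainID(L0)     = DiffID(L0)
    ChainID(L0..Ln) = digest(ChainID(L0..Ln-1) + " " + DiffID(Ln))
    """
    result: List[str] = []
    for diff_id in diff_ids:
        if not result:
            result.append(diff_id)
        else:
            result.append(digest(f"{result[-1]} {diff_id}".encode()))
    return result


if __name__ == "__main__":
    # Made-up byte strings stand in for uncompressed layer tarballs.
    base = digest(b"base image filesystem")
    image_a = chain_ids([base, digest(b"RUN: install curl")])
    image_b = chain_ids([base, digest(b"RUN: install git")])
    print(image_a[0] == image_b[0])  # shared base layer, so the same ChainID
    print(image_a[1] == image_b[1])  # different RUN output, so a different ChainID
```

Two images built from the same base share the ChainID of that base, which is what lets a Docker host store the shared layer only once; the chains diverge as soon as a RUN instruction produces different content.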

Docker compose

Docker Compose is a tool for configuring your application's services and managing their dependencies across different containers. Compose files are written in YAML.
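Compose files declare start-up dependencies between services with a `depends_on` key, and Compose starts a service's dependencies before the service itself. The Python sketch below illustrates that ordering idea with a topological sort from the standard library's `graphlib` (Python 3.9+). The service names and images are hypothetical, and this is not how Compose itself is implemented.

```python
from graphlib import TopologicalSorter
from typing import Dict, List


def start_order(services: Dict[str, dict]) -> List[str]:
    """Return an order in which services can be started so that each one
    starts after every service named in its depends_on list."""
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    for name, deps in graph.items():
        missing = deps - set(services)
        if missing:
            raise ValueError(f"{name} depends on undefined services: {sorted(missing)}")
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    # A hypothetical application, mirroring the "services" section of a compose file.
    services = {
        "web": {"image": "example/web", "depends_on": ["api"]},
        "api": {"image": "example/api", "depends_on": ["db", "cache"]},
        "db": {"image": "postgres"},
        "cache": {"image": "redis"},
    }
    print(start_order(services))
```

Any valid order starts `db` and `cache` before `api`, and `api` before `web`; a circular dependency is reported as an error instead of being started in some arbitrary order.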

Copy on Write Storage drivers

One of the key components of Docker is its copy-on-write storage drivers. Union filesystems like AUFS (Another Union File System) allow filesystems to be superimposed on top of each other so that a user sees the combined contents of all of them. This design choice is one of the primary reasons containers boot so quickly. AUFS offers copy-on-write at the file level; other storage drivers offer finer granularity, with copy-on-write at the block level. Such storage drivers let Docker build a layered stack of images, one on top of another, each identified by a hash much like a git commit. Docker keeps the image layers read-only and creates a new writable layer for each container, into which that container's modifications are written. This architecture saves a lot of space on a Docker host, because multiple containers share the common layers that make up their images.
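The copy-on-write behaviour can be modelled in a few lines. In this toy Python model, which is an illustration and not how AUFS or any real storage driver is implemented, an image is a list of shared read-only layers, each container gets its own writable upper layer, lookups search from the top layer down, modifying a file first copies it up into the container's layer, and deleting a file records a whiteout entry instead of touching the shared layers. `UnionFS` is a hypothetical name.

```python
from typing import Dict, List, Optional


class UnionFS:
    """Toy union filesystem: shared read-only image layers plus one private,
    writable upper layer, with copy-on-write at file granularity."""

    def __init__(self, image_layers: List[Dict[str, bytes]]):
        self.lower = image_layers  # index 0 is the base layer; never modified
        # This container's writable layer; a value of None is a whiteout.
        self.upper: Dict[str, Optional[bytes]] = {}

    def read(self, path: str) -> Optional[bytes]:
        # Search from the top layer down; the first layer containing the path wins.
        for layer in [self.upper, *reversed(self.lower)]:
            if path in layer:
                return layer[path]  # None here means "deleted by a whiteout"
        return None

    def write(self, path: str, data: bytes) -> None:
        # New or replaced files go into the upper layer only.
        self.upper[path] = data

    def append(self, path: str, data: bytes) -> None:
        # Copy-up: copy the current file into the upper layer, then modify that copy.
        current = self.read(path)
        self.upper[path] = (current if current is not None else b"") + data

    def delete(self, path: str) -> None:
        # Lower layers are read-only, so a deletion is recorded as a whiteout
        # entry in the upper layer that hides the file below.
        self.upper[path] = None
```

Two `UnionFS` objects built from the same list of layers behave like two containers started from one image: each sees its own changes, while the shared layers stay untouched.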

Networking

Another key requirement of distributed applications, and especially of containers in a service-oriented architecture, is networking. By default, Docker Engine gives each container its own network namespace with a loopback interface and an Ethernet interface. The Ethernet interface is one end of a virtual Ethernet (veth) pair whose other end is attached to a bridge on the host called docker0, so containers on the same host are linked through that bridge. The host then routes the bridge's traffic out through its physical network interface (using NAT), giving the containers external network access.
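Each container attached to the default bridge receives an address from the bridge's subnet, with the bridge itself acting as the gateway. On many installations that subnet is `172.17.0.0/16`, but it is configurable, so treat it as an assumption here. The sketch below is a toy address allocator built on Python's `ipaddress` module; `BridgeIPAM` is a hypothetical name, and Docker's real IP address management is more involved.

```python
import ipaddress
from typing import Dict, List, Union

Address = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


class BridgeIPAM:
    """Toy allocator handing out container addresses from a bridge's subnet."""

    def __init__(self, subnet: str = "172.17.0.0/16"):
        self.network = ipaddress.ip_network(subnet)
        # hosts() skips the network and broadcast addresses.
        self._unused = iter(self.network.hosts())
        self.gateway: Address = next(self._unused)  # the bridge takes the first host address
        self._released: List[Address] = []
        self.assigned: Dict[str, Address] = {}

    def allocate(self, container_id: str) -> Address:
        if container_id in self.assigned:
            return self.assigned[container_id]  # one address per container
        if self._released:
            addr = self._released.pop(0)        # reuse freed addresses first
        else:
            addr = next(self._unused, None)
            if addr is None:
                raise RuntimeError(f"subnet {self.network} is exhausted")
        self.assigned[container_id] = addr
        return addr

    def release(self, container_id: str) -> None:
        # Called when a container is removed, so its address can be reused.
        self._released.append(self.assigned.pop(container_id))
```

With the default subnet above, the bridge gets `172.17.0.1` and the first container `172.17.0.2`, which matches what you often see on a fresh Docker host, though Docker does not promise that exact sequence.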

How and where can I install Docker?

Docker can be deployed on any platform that is either Linux-based (with support for cgroups and namespaces) or able to run a hypervisor, such as VirtualBox or Hyper-V, that can host a Docker-capable Linux operating system. Many non-Linux platforms use Vagrant to spin up a Linux host in VirtualBox. Docker is also easy to deploy in the cloud, as a number of tools facilitate running containers on clouds like DigitalOcean, Google Compute Engine, AWS, and Azure. One of them is docker-machine, which is installed on clients along with Docker and makes it easy to create, start, stop, and destroy Docker hosts on a cloud platform. I will write about installing and running Docker on different platforms in future blog posts.

Challenges for Docker

Like all technologies, Docker has some challenges and growth areas.

Container Orchestration

Containers have made implementing distributed microservices a reality. But to achieve scalability and resilience, we must be able to manage the individual services of an application. These services may run on the same Docker host or on multiple hosts. There might be situations where two services running in separate containers must be placed on the same Docker host. Such complexities make it a real challenge to develop and deploy distributed applications correctly.
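Co-location requirements turn placement into a small scheduling problem. The Python sketch below is a deliberately naive first-fit scheduler: groups of services that must share a host are placed together, and each host's capacity is simplified to a count of free container slots. `place` and its inputs are hypothetical; real orchestrators address the same need with richer mechanisms, such as Kubernetes pods and affinity rules.

```python
from typing import Dict, List


def place(groups: List[List[str]], hosts: Dict[str, int]) -> Dict[str, str]:
    """Assign every service to a host.

    groups: services that must be co-located on the same host; a service
            without such a constraint is simply a group of one.
    hosts:  free container slots per host (a stand-in for CPU and memory).
    Places the largest groups first, each on the first host that fits it.
    """
    free = dict(hosts)
    placement: Dict[str, str] = {}
    for group in sorted(groups, key=len, reverse=True):
        for host, slots in free.items():
            if slots >= len(group):
                for service in group:
                    placement[service] = host
                free[host] = slots - len(group)
                break
        else:
            raise RuntimeError(f"no host can fit co-located group {group}")
    return placement
```

For example, `place([["api", "sidecar"], ["db"], ["cache"]], {"host-a": 2, "host-b": 2})` keeps `api` and `sidecar` together on one host and spreads the remaining services over the free slots.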

Container orchestration frameworks help tackle this problem. Prominent among them are Kubernetes (a container orchestration framework developed by Google) and Docker Swarm. The role of these frameworks is to manage the containers and facilitate the communication between them, so that the application performs as defined. They offer a lot of value-added features, such as rolling upgrades, where an application's services are upgraded to a new version without users experiencing any downtime. Application writers can easily scale their services up or down by configuring the number of replicas, for example through the replication controllers these frameworks offer. Often there is a need for an overlay network connecting different Docker hosts, so that containers running on one Docker host can communicate with those running on another. An application may also need service discovery; for these scenarios we can use solutions like etcd or ZooKeeper.
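Scaling in orchestrators like Kubernetes is declarative: you state how many replicas of a service you want, and a control loop repeatedly compares that number with what is actually running, starting or stopping containers to close the gap. The function below sketches a single pass of such a loop in Python; the `reconcile` name and the `<service>-<n>` naming scheme are hypothetical.

```python
from typing import List, Tuple


def reconcile(service: str, desired: int, running: List[str]) -> Tuple[List[str], List[str]]:
    """Compare the desired replica count with the running replicas and
    return (containers_to_start, containers_to_stop)."""
    if desired < 0:
        raise ValueError("desired replica count must be non-negative")
    running = sorted(running)
    if len(running) > desired:
        # Scale down: stop the surplus replicas (here, the last ones by sorted name).
        return [], running[desired:]
    # Scale up: generate fresh names that are not already in use.
    in_use = set(running)
    to_start: List[str] = []
    index = 0
    while len(running) + len(to_start) < desired:
        name = f"{service}-{index}"
        if name not in in_use:
            to_start.append(name)
        index += 1
    return to_start, []
```

A controller would run this on every change, or periodically. That is also why such systems recover from crashed containers: a replica that dies simply shows up as missing on the next pass and is started again.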

Security

Container security is an important concern, because if a single service of an application is compromised, it can bring down the whole application. To address this, many commercial container deployments use lean Linux distributions like Alpine or CoreOS and further tailor those images to each service's needs by hardening them. With less code and fewer packages to monitor for vulnerabilities, we can be more confident about securing our applications (although a perfectly secure application is a myth :) ).

Looking Ahead

The rise of service-oriented architecture and cloud computing has catapulted Docker to the forefront of distributed application development, and there is already huge momentum among people interested in using Docker for their platforms. The main thrust in the future will be on container security and on the development of container orchestration frameworks like Kubernetes and Docker Swarm, as these frameworks make it easy to deploy and manage containers. There is also a lot of activity around container management in hybrid cloud environments.
